Jaron Lanier: an unlikely prophet of the digital age

In an interview with Wired magazine (12/12/18), Geoff Hinton, a cognitive psychologist and head of Google’s artificial intelligence research team, fielded a question about the ethical challenges soon to be posed by technologies running on A.I. The A.I. controlling, say, a driverless car will sooner or later have to make a choice (if that is the right word for it) about which way to swerve when it loses control of the steering: toward the park over there, placing pedestrians and joggers at risk? Or toward an embankment on the other side of the road, placing the car’s own passengers at greater risk? A morally dependable A.I. would have to behave a lot like the human brain:

People can’t explain how they work, for most of the things they do. When you hire somebody, the decision is based on all sorts of things you can quantify, and then all sorts of gut feelings. People have no idea how they do that. If you ask them to explain their decision, you are forcing them to make up a story.

Neural nets have a similar problem. When you train a neural net, it will learn a billion numbers that represent the knowledge it has extracted from the training data. If you put in an image, out comes the right decision, say, whether this was a pedestrian or not. But if you ask, “Why did it think that?” Well, if there were any simple rules for deciding whether an image contains a pedestrian or not, it would have been a solved problem ages ago.

There is a level at which, Hinton seems to argue, the brain cannot be fully understood. Any attempt to fully explain the gut feelings, moral hunches, snap judgments and other quick reactions of the brain would be futile, or a made-up story. The volume and intricacy of these cognitive operations are so great that a complete account of them would be like a map with the same area as the territory it covers.

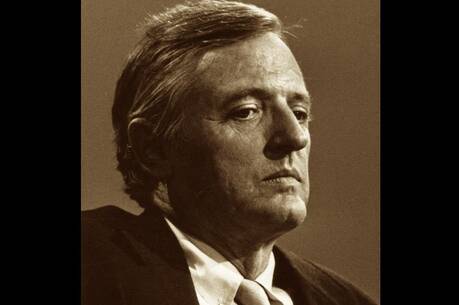

Hinton’s statement would not surprise Jaron Lanier, an interdisciplinary scientist at Microsoft, who in his latest book, Ten Arguments for Deleting Your Social Media Accounts Right Now, writes: “AI is a fantasy, nothing but a story we tell about our code.” This is an exaggeration, but Lanier has been trying to get his point across for almost 20 years: Human beings are special in a way that computers, even A.I., are not. If we miss this point, Lanier says, we will create bad technology. Lanier’s writing career spans from seminal early essays—“Digital Maoism” and “One Half a Manifesto”—through five books that have shaped the conversation regarding the Internet, A.I. and human nature.

Most people in tech, Lanier believes, have not reached the same conclusion as Geoff Hinton, and even Hinton himself might not totally understand the importance of what he has said. There could be high costs for thinking of consciousness in terms of computing, rather than the other way around. For example, Lanier argues that thinking about human consciousness in terms of computing has led to a diminished appreciation of the importance of individual authorship.

In his second book, You Are Not a Gadget (2010), Lanier quotes Kevin Kelly, executive editor of Wired magazine, who has argued that there is a “moral imperative” to meld all the world’s books into “one book,” wikified and online. This idea horrifies Lanier. If implemented, the “one book” would crush the individual author amid “the digital flattening of expression into a global mush.” The cost would be freedom of thought: “any singular, exclusive book, even the collective one accumulating in the cloud, will become a cruel book if it is the only one available.” What Lanier ultimately fears is the rise of a tech-driven collectivist ideology that drowns out the individual mind. He gives several names to this fear, like “digital Maoism,” “cybernetic totalism,” “computationalism” and—in Ten Arguments—“social media.”

Pavlovian Response

Ten Arguments is a less speculative book than Gadget. Less than 150 pages long and consisting of numbered arguments, it is a cross between a political pamphlet and a manifesto. Most of these arguments are founded on the idea that all forms of existing social media treat human beings as if they were basically a bundle of nerve endings. These nerve endings behave in relatively predictable ways when bombarded with certain stimuli. Lanier defines social media through an acronym, BUMMER, which stands for “Behaviors of Users Modified, and Made into an Empire for Rent.” Our behavior is modified by algorithms so that it generates clicks, and clicks generate profit. In other words, social media treats us like Pavlov’s dog, and if you spend enough time there, you will become the dog.

In his first argument, “You are losing your free will,” Lanier argues that social media algorithms attempt to generate addiction in their users by applying behaviorist techniques, and that these techniques in turn are very amenable to the binary mode of thinking that is characteristic of computing. “There’s something about the rigidity of digital technology, the on-and-off nature of the bit, that attracts the behaviorist way of thinking. Reward and punishment are like one and zero,” Lanier writes. In fact, programmers have harnessed the power of reward-and-punishment by encouraging addiction: “Social media is biased, not to the Left or the Right, but downward. The relative ease of using negative emotions for the purposes of addiction and manipulation makes it relatively easier to achieve undignified results.” These claims are not controversial; as Lanier himself notes, many people who have worked in social media (like Sean Parker, former president of Facebook) have made similar arguments.

The fact that negative emotions are the ones most easily and reliably generated by social media means that its algorithms will push to inspire them. BUMMER thus becomes “a style of business plan that spews out perverse incentives and corrupts people.” From here follow many of the other arguments for quitting social media: It causes a sort of “insanity” (Argument Two); it gives us an incentive to become trolls and harass others (Argument Three); it makes us depressed (Argument Seven); it hurts our capacity to empathize with others by promoting a default adversarial attitude (Argument Six). Lanier also critiques social media’s economic and political impacts in Arguments Eight and Nine, and a longer, fuller version of his arguments is found in his 2013 book, Who Owns the Future?

All these arguments are something of a prologue to a coruscating finale, Argument Ten: “Social Media Hates Your Soul.” BUMMER amounts to nothing short of a new religion, one responsible for “metaphysical imperialism.” “If you use BUMMER… [you have] effectively renounced what you might think is your religion, even if that religion is atheism.” Instead, you have adopted BUMMER’s new conception of “what it means to be a person.”

BUMMER diminishes our free will by conditioning our behavior; it tricks us, cult-like, into believing that the good aspects of technology would not be able to exist without it. It increases mob-like and antisocial behavior through its behavioral schemes and defines truth for us by having us read and watch only what its algorithms tell us we should want to read and watch. Moreover, Lanier believes that the increasingly popular notion that A.I. will eventually surpass human intelligence in power and scope, and that the riddle of death will somehow be solved along with it—what is now known as the Singularity—means that BUMMER tech and its promoters will have less loyalty to “present-day humans” than to “future AIs.”

Liberation Technology

Lanier arrived in Silicon Valley in the early 1980s, just as its ideas and inventions were poised to change the world. Lanier was part of that change, too—he made his name primarily in the field of virtual reality, his abiding passion. But he eventually woke up, sometime in the 1990s, to discover himself spiritually at odds with the tech culture he helped create—not because he fell out of love with technology and invention. Quite the contrary.

Unlike previous critics of technology, like Jacques Ellul, Neil Postman or Martin Heidegger, Lanier is positively enthusiastic in his conviction that technology can serve human happiness and freedom. He places a great deal of hope in virtual reality, which he defines as an opposite idea to A.I., because it is “the technology that...highlights the existence of your subjective experience. It proves you are real.” It is a medium “potent for beauty.” It seems futuristic, but in fact is already commonplace in the worlds of entertainment, automotive engineering and medicine. Lanier is not a Luddite; he is a liberation technologist.

Lanier’s personal history is one of eclectic experiences and realities. He is an accomplished musician who specializes in playing rare and forgotten instruments; he paid for college courses (as a teenager) by raising goats and making cheese; he worked as a graduate-level teaching assistant in math courses at New Mexico State University at age 17; he spent much of his youth on the road as something of a bohemian, including a stint as an antinuclear activist; he hung out at Caltech (where he was not enrolled) with the physicist Richard Feynman, as well as in New York, playing music with John Cage.

Lanier also suffered greatly as a child. The son of Holocaust survivors, he lost his mother during his childhood in a car accident. Lanier’s family history has also contributed to his singular combination of American adventurism and healthy disregard for established academic authority, coupled with Old World education and sensibility. Lanier’s maternal grandfather, a rabbi, was an associate of the Jewish philosopher Martin Buber, and Buber’s influence is detectable in Lanier’s thought. Lanier’s mother, a painter and pianist trained in Vienna, even after being chased out of Europe by the Nazi ideology that had possessed her countrymen, taught Lanier to appreciate and preserve “the good in Europe.”

In any case, Lanier’s unique genius is not what alienated him from the tech milieu. As he notes in his delightful 2017 memoir, Dawn of the New Everything: Encounters With Reality and Virtual Reality, there were many free spirits like him in Silicon Valley in the 1980s. Lanier found himself at odds with Silicon Valley when he started noticing the emergence of an ideology that, he feared, threatened the very quirkiness, strangeness, passion and wandering nature of human existence—that is, an ideology that, through optimization, surveillance and other forms of control, threatened the life of a free spirit.

More specifically, Lanier was at odds with the idea that the primary task in tech research should be to reverse-engineer the human brain, as if the brain were a computer that can be broken up into its component parts. This is the view Lanier claims is becoming the norm. If the brain is a computer, consciousness is data and you and I are merely gadgets, then ultimately we will become obsolete through optimization, secondary in importance to the machines that will overtake us. No more random road trips, time wasted fiddling with ancient instruments or bull sessions about the nature of the universe.

Mind Over Machine

To fight the “new religion,” Lanier insists that we recognize the superiority of the mind over the machine. As he puts it in Gadget: “If a machine can be [said to be] conscious, then the computing cloud is going to be a better and far more capacious consciousness than is found in an individual person. If you believe this, then working for the benefit of the cloud over individual people puts you on the side of the angels.” But really, it would put you on the side of the demons.

If the machines do overtake us, however, it will not be because they are somehow superior, but because, and this is Lanier’s big point, we chose this fate for ourselves. “When you live as if there’s nothing special, no mystical spark inside you,” he writes in Ten Arguments, “you gradually start to believe it.” So don’t. Social media is driving us crazy, taking away our freedom. Delete your account.

But does Lanier see that we need more than the mere will to believe that human beings are special in order to really believe it? “Consciousness is the only thing that isn’t weakened if it’s an illusion,” he claims. The pragmatic argument—let us believe in X, even if it is an illusion, because not believing in X will lead to disaster—sounds good only in theory. In practice, we need deep reasons to sustain the belief that human life contains a “mystical spark.”

Here is where “the good in Europe” comes in. Western philosophy (which extends beyond Europe) has resources for thinking about human nature in ways that go beyond behaviorism. It includes thinkers who made robust claims about how human thought transcends computing: how it grasps eternal truths (such as mathematical principles), and makes evaluations (about goodness or beauty). The debate over the nature of consciousness is not new: While many historians trace the origins of the debate to 17th-century thinkers like Locke and Descartes, it actually goes as far back as Plato’s Phaedo, where Socrates argues that any full account of human existence requires a concept of morality and not only biology.

Philosophy also provides a language for dialogue with those technologists Lanier disagrees with. Ironically, ancient philosophers would understand the longing for immortality better than some representatives of modern thought, such as Freud or Marx, who would dismiss it as neurosis or false consciousness. All living creatures, Aristotle wrote in On the Soul, “stretch out” toward “that which always is, and is divine.” Peter Thiel, PayPal founder and a venture capitalist, has engaged in just this type of dialogue with the theologian N.T. Wright, comparing the claims of the Judeo-Christian tradition with “tech optimism.”

Lanier has cleared the ground for a renewed account of human nature. Hopefully, others will follow his lead.

This article also appeared in print, under the headline “An Unlikely Prophet,” in the Fall Literary Review 2019 issue.