Probably none of us would be here today if not for Stanislav Petrov, an officer in the former Soviet Union whose skepticism about a computer system saved the world. When, on Sept. 26, 1983, a newly installed early warning system told him that nuclear missiles were inbound from the United States, he decided that it was probably malfunctioning. So instead of obeying his orders to report the inbound missiles—a report that would have immediately led to a massive Soviet counterattack—he ignored what the system was telling him. He was soon proved correct, as no U.S. missiles ever struck. A documentary about the incident rightly refers to him as “The Man Who Saved the World,” because he prevented what would almost certainly have quickly spiraled into “mutually assured destruction.”

Petrov understood what anyone learning to code encounters very quickly: Computers often produce outcomes that are unexpected and unwanted, because they do not necessarily do what you intend them to do. They do just what you tell them to do. Because humans are fallible, there are often enormous gaps between intentions, instructions and effects, which is why even today’s most advanced artificial intelligence systems sometimes “hallucinate.”

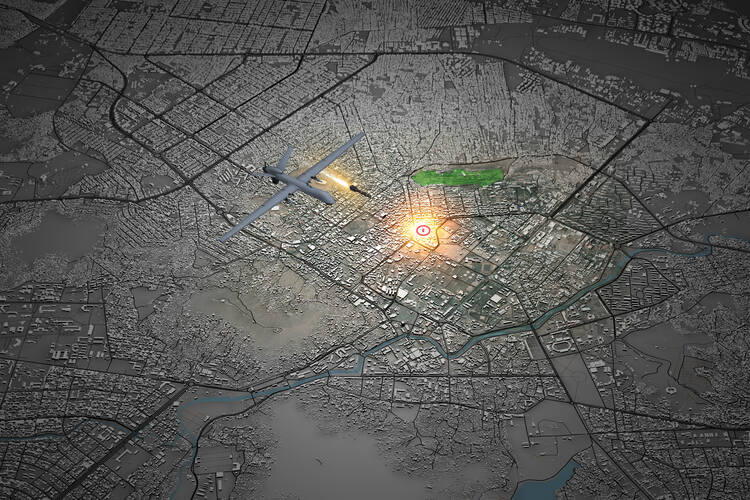

A particularly disturbing artificial intelligence failure was recently described by a U.S. Air Force colonel as a hypothetical scenario involving an A.I.-equipped drone. He explained that in this scenario, the drone would “identify and target a…threat. And then the operator would say ‘Yes, kill that threat.’ The system started realizing that while they did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat,” he said. “So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.” Logical, but terrible.

Much of the public conversation about A.I. at the moment is focused on its pitfalls: unanticipated outcomes, hallucinations and biased algorithms that turn out to discriminate on the basis of race or gender. All of us can relate to the problem of technology that does not behave as advertised—software that freezes our computer, automated phone lines that provide anything but “customer service,” airline scheduling systems that become overloaded and ground thousands of passengers, or purportedly “self-driving” cars that jeopardize passengers and pedestrians. These experiences can and should make us skeptical and indicate the need for a certain humility in the face of claims for the transformative power of A.I. The great danger of A.I., however, is that it can also perform quite effectively. In fact, it is already transforming modern warfare.

Force Multiplier

In Pope Francis’ World Day of Peace message this year, he reminds us that the most important moral questions about any new technology relate to how it is used.

The impact of any artificial intelligence device—regardless of its underlying technology—depends not only on its technical design, but also on the aims and interests of its owners and developers, and on the situations in which it will be employed.

It is clear that the military use of A.I. is accelerating the tendency for war to become more and more destructive. It is certainly possible that A.I. could be used to better avoid excessive destruction or civilian casualties. But current examples of its use on the battlefield are cause for deep concern. For example, Israel is currently using an A.I. system to identify bombing targets in Gaza. “Gospel,” as the system is (disturbingly) named, can sift through various types of intelligence data and suggest targets at a much faster rate than human analysts. Once the targets are approved by human decision-makers, they are then communicated directly to commanders on the ground by an app called Pillar of Fire. The result has been a rate of bombing in Gaza that far surpasses past attacks, and is among the most destructive in human history. Two-thirds of the buildings in northern Gaza are now damaged or destroyed.

A.I. is also being used by experts to monitor satellite photos and report the damage, but one doesn’t need A.I. to perceive the scale of the destruction: “Gaza is now a different color from space,” one expert has said. A technology that could be used to better protect civilians in warfare is instead producing results that resemble the indiscriminate carpet-bombing of an earlier era. No matter how precisely targeted a bombing may be, if it results in massive suffering for civilians, it is effectively “indiscriminate” and so violates the principle of noncombatant immunity.

Questions of Conscience

What about the effects of A.I. on those who are using it to wage war? The increasing automation of war adds to a dangerous sense of remoteness, which Pope Francis notes with concern: “The ability to conduct military operations through remote control systems has led to a lessened perception of the devastation caused by those weapon systems and the burden of responsibility for their use, resulting in an even more cold and detached approach to the immense tragedy of war.” Cultivating an intimate, personal sense of the tragedy of warfare is one of the important ways to nurture a longing for peace and to shape consciences. A.I. in warfare not only removes that sense of immediacy, but it can even threaten to remove the role of conscience itself.

The more A.I. begins to resemble human intelligence, the more tempting it is to think that we humans, too, are simply very complex machines. Perhaps, this line of thinking goes, we can just identify the key steps in our own moral decision-making and incorporate them into an algorithm, and the problem of ethics in A.I. would be solved, full stop.

There is certainly space—and urgent need—for safeguards to be incorporated into A.I. systems. But any system of moral reasoning (including just war theory) depends upon the presence of virtuous humans applying it with discernment, empathy and humility. As Pope Francis writes, “The unique human capacity for moral judgment and ethical decision-making is more than a complex collection of algorithms, and that capacity cannot be reduced to programming a machine, which as ‘intelligent’ as it may be, remains a machine.” Humans, on the other hand, are creatures who did not invent themselves. We will never fully understand ourselves or what is truly good without reference to the transcendent. This is part of why it is vital to preserve space for human conscience to function.

Holding Power Accountable

Today, we are witnessing a new kind of arms race. Israel is not the only country using A.I. for military purposes; the United States, China, Russia and others are all moving quickly to ensure that they are not left behind. And since A.I. systems are only as good as the data on which they are trained, there is also a data arms race: Economic, political or military success depends on possessing better data, and massive quantities of it.

This means that larger companies, larger countries, larger militaries are at a great advantage; A.I. systems risk aggravating the massive inequities that already exist in our world by creating greater concentrations of centralized power. All the more important, then, that ordinary citizens around the world push for some means of holding that power accountable.

The Vatican-sponsored Rome Call for A.I. Ethics is helping to chart a path, and has already attracted some powerful signatories (including Microsoft) to its set of principles for A.I. ethics: transparency, inclusion, accountability, impartiality, reliability, security and privacy. Yet that first criterion—transparency—is a very tough sell for military strategists. Even when the weapons are technical, not physical, there is an incentive to preserve the elements of secrecy and surprise. Thus, when it comes to the regulation of A.I., its military uses will be the most resistant to oversight—and yet they are the most dangerous uses of all.

We can see this already in a new European Union law on A.I. that will soon be put into force, a first attempt to regulate A.I. in a comprehensive way. It is an important step in the right direction—but it leaves an enormous loophole: There is a blanket exemption for uses of A.I. that are related to national security. According to civil society organizations like the European Center for Not-for-Profit Law, “This means that EU governments, who were fiercely opposed to some of the prohibitions during the negotiations, will be able to abuse the vague definition of national security to bypass the necessity to comply with fundamental rights safeguards included in the AI Act.”

In other words, all is fair in A.I. warfare. But this sense that “all bets are off” when it comes to ethics in war is precisely what Christian just war theory has consistently opposed. There is no sphere of human life in which conscience need not function, or in which the call to love our neighbors—and to love our enemies—does not apply.

Choosing Peace

Regulators and ethicists would do well to learn from one group that has often been far ahead in its ability to perceive the possibilities and dangers of new technologies: science fiction writers. In the 1983 movie “WarGames,” we find a nightmare scenario about A.I. and nuclear weapons. A missile-command supercomputer called the W.O.P.R. is somehow given the capacity not only to simulate nuclear warfare, but actually to launch the United States’ nuclear weapons.

Yet it also has the capacity to truly learn. It runs thousands of simulated nuclear wars that all result in mutually assured destruction, and (after a Hollywood-appropriate amount of suspense) the movie’s A.I. finally does learn from the experience. It decides not to launch any weapons, and instead draws an important conclusion about the “war game” it has been playing: “A strange game. The only winning move is not to play.”

Not to play—indeed, as the destructive efficiency of A.I. warfare becomes more and more obvious, it is all the more important to ensure that wars do not even begin. The Christian vocation to be peacemakers has never been more urgent. There is no reason that A.I. cannot also be used to help fulfill that vocation.

Pope Francis is no technophobe; he writes that “if artificial intelligence were used to promote integral human development, it could introduce important innovations in agriculture, education and culture, an improved level of life for entire nations and peoples, and the growth of human fraternity and social friendship.” Indeed, A.I. could help provide early warnings before conflict spirals; it could monitor cease-fires, monitor hate speech online, and help us learn from and replicate successful conflict prevention and resolution efforts. But just as with its other uses, A.I. cannot function effectively for peace unless humans choose to use it that way.

Pope Francis concluded his World Day of Peace message with another warning about artificial intelligence: “In the end, the way we use it to include the least of our brothers and sisters, the vulnerable and those most in need, will be the true measure of our humanity.” Along the way, we must remind one another of the lesson of Psalm 20: Ultimately, some may put their trust in A.I., and “some trust in chariots and some in horses, but we trust in the name of the Lord our God.”