How you feel about the current, very public argument between Apple and the F.B.I. probably turns, as so many situations do, on how you frame the question. There are two obvious ways to frame it, but neither gets to the deepest problem at stake in this issue.

The first version:

- Should Apple help the F.B.I. access data from the San Bernardino shooter’s iPhone?

The second version:

- Should the government be able to force Apple to produce a broken, less secure version of its operating system so that the F.B.I. can hack the San Bernardino shooter’s iPhone?

The problem is that both of these questions are reasonable descriptions of what Apple and the F.B.I. are arguing over. The bigger problem is that they are both fundamentally temporary questions. Effectively unbreakable encryption is possible, and could have been used even in this case. Its availability will only become more widespread. Whatever the legal resolution of this case, we also need to think about how we will balance civil rights and security concerns going forward, recognizing that both terrorists and common criminals can make use of encryption that we cannot break.

No one disputes that Apple is technically capable, in this case, of helping the F.B.I. gain access to the data on the phone. If you are principally interested in the outcome of this particular case and whether the authorities should be able to act on this data, then the answer is clear, and you’re looking at the problem from the first question’s angle.

The other version of the question focuses on how Apple would assist the F.B.I. It is tempting to skip over this point because it delves into technical details about encryption, but it matters. Apple would have to produce a modified version of iOS, the operating system for the iPhone, in which several important security features have been disabled or short-circuited. With that modified software, the F.B.I. would have an unobstructed and optimized ability to try every possible four-digit passcode in order to access the iPhone. If you are concerned about the precedent such a tool would set, and about the level of privacy that should be possible in general on our electronic devices, then you’re looking at this from the second question’s angle.

Another way of breaking these questions down has to do with how much trust you have in our ability to control access to technical capabilities once they exist. If you generally trust the government to use its surveillance powers in a limited way, and only in accord with warrants and court orders, you’ll probably feel comfortable giving the government a tool that allows it, in principle, to hack any iPhone. But if you are concerned that the government might exceed its surveillance powers, or might be unable to control the use of a tool like this once it is widely distributed (or falls into the hands of hackers, or is legally compelled from Apple by another country, say China), then you might prefer that the tool never be created at all. And if you’re concerned that the F.B.I. already has another 12 iPhones it wants to crack open, or that the Manhattan district attorney has 175, which are related to criminal cases but not to terrorism, you might want to make sure that the government’s surveillance powers remain more limited.

Ultimately, I come down on Apple’s side of this argument, but not because I innately distrust the government, nor because I am in the bag for Apple. I’m against compelling Apple to produce a crackable version of iOS because the proposed gain is too small, in the medium- to long-term view, to justify weakening civil liberties protections.

The only reason we can have this argument at all is that the four-digit passcode protecting the information on the phone is so weak that the F.B.I. could easily test all 10,000 possibilities, if Apple provided a version of the operating system that let it do so. (The normal operating system introduces increasing delays after several failed attempts, and can be set to erase the phone after 10 failed attempts.)
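The brute-force attack described above is simple enough to sketch in a few lines. The Python below is a minimal illustration under simplified assumptions. The names `all_four_digit_passcodes` and `brute_force` are hypothetical. Guesses are tried in numeric order. The escalating delays are ignored. The erase feature is modeled as a hard limit of 10 wrong guesses. It shows that the attempt limit, not the size of the search space, is what stands in the way.

```python
from itertools import product
from typing import Iterator, Optional, Tuple


def all_four_digit_passcodes() -> Iterator[str]:
    """Yield every four-digit passcode, "0000" through "9999" (10**4 of them)."""
    for digits in product("0123456789", repeat=4):
        yield "".join(digits)


def brute_force(secret: str, max_attempts: Optional[int] = None) -> Tuple[bool, int]:
    """Guess passcodes in order until `secret` is found.

    Hypothetical simplification: `max_attempts` stands in for an
    erase-after-N-failures setting. Once that many wrong guesses have been
    made, the data is gone. Passing None models a modified OS with the limit
    removed. Delays between attempts are ignored in this sketch.
    Returns (found, number_of_guesses_made).
    """
    guesses = 0
    for guess in all_four_digit_passcodes():
        guesses += 1
        if guess == secret:
            return True, guesses
        if max_attempts is not None and guesses >= max_attempts:
            return False, guesses  # device wiped; no further guesses possible
    return False, guesses


if __name__ == "__main__":
    print(brute_force("7391", max_attempts=10))  # erase limit in place (simplified)
    print(brute_force("7391"))                   # limit removed
```

Under these assumptions, `brute_force("7391", max_attempts=10)` gives up after 10 wrong guesses. With the limit removed, `brute_force("7391")` finds the code on guess 7,392, and no four-digit code survives more than 10,000 guesses.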

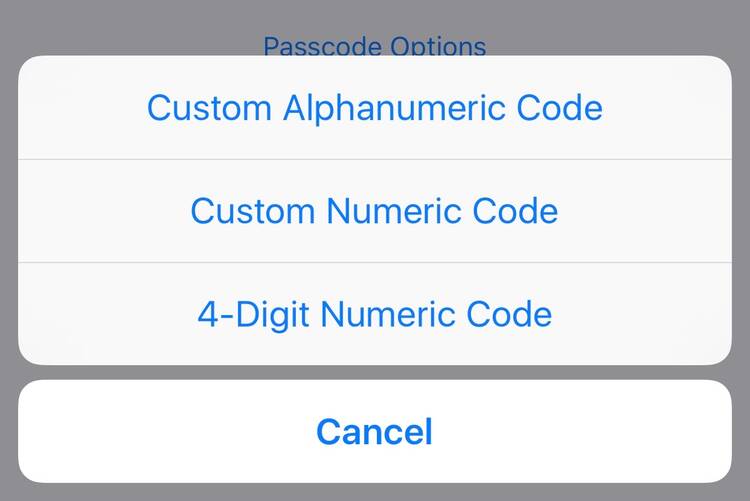

But the phone already could have been configured differently. As can yours, right now. Four-digit iPhone passcodes were the default option, and now six-digit passcodes are the norm, but Apple also provides the ability to choose a longer numeric passcode or an alphanumeric passcode—arbitrarily long, using the full range of characters available. If that sort of passcode had been enabled, it wouldn’t matter how quickly the F.B.I. could try all available passcodes; there are simply too many to get through them all in any useful timeframe. A 10-digit passcode would require up to 25 years for exhaustive testing, even with Apple’s full cooperation, and even relatively short alphanumeric passcodes can match this complexity.
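The arithmetic behind that 25-year figure is worth making explicit. The search space is the alphabet size raised to the passcode length, so each extra character multiplies the work. The sketch below assumes roughly 80 ms per guess, which is the per-attempt cost Apple has described for its on-device key derivation. That figure is not stated in this essay, though it is consistent with the 25-year estimate. The function name `exhaustive_search_seconds` is hypothetical.

```python
# Assumption (not from the essay): each passcode guess costs about 80 ms,
# because the phone runs a deliberately slow key-derivation step per attempt.
SECONDS_PER_GUESS = 0.08
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def exhaustive_search_seconds(alphabet_size: int, length: int,
                              seconds_per_guess: float = SECONDS_PER_GUESS) -> float:
    """Worst-case time to try every passcode of `length` symbols drawn
    from an alphabet of `alphabet_size` symbols."""
    keyspace = alphabet_size ** length  # grows exponentially with length
    return keyspace * seconds_per_guess


if __name__ == "__main__":
    cases = [
        ("4-digit PIN", 10, 4),
        ("6-digit PIN", 10, 6),
        ("10-digit PIN", 10, 10),
        ("7 chars, a-z and 0-9", 36, 7),
    ]
    for label, alphabet, length in cases:
        secs = exhaustive_search_seconds(alphabet, length)
        print(f"{label:>22}: {secs / 3600:>14,.1f} hours"
              f" = {secs / SECONDS_PER_YEAR:>8,.2f} years")
```

At 80 ms per guess, all four-digit codes fall in about 13 minutes and all six-digit codes in about 22 hours. A 10-digit code takes about 25 years. A seven-character passcode using only lowercase letters and digits already pushes the worst case to roughly 199 years.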

To sum up the present state of affairs: effectively unbreakable encryption is possible, and already exists.

Given that reality, I think the second version of the question, which asks whether Apple should be compelled to produce a broken, hackable version of its operating system, becomes more important. It’s simply not possible to guarantee that the government can always crack into phones belonging to the “bad guys.” That’s not because Apple or anyone else is acting in bad faith, and it’s not the result of any political decision whatsoever. It follows from the mathematics of encryption: it is possible to make encryption so hard to crack that no amount of time or computing power we can realistically bring to bear will break it.

Since that’s the world we live in, I’d prefer to conserve our existing civil liberties rather than weaken them to achieve a small gain in security that will evaporate quickly. The F.B.I. wants to find out who else the San Bernardino shooters may have been in contact with, and whether there were other plans in motion for other attacks. Hacking into this phone may help answer some of those questions, but only if that data wasn’t more carefully encrypted or deleted in the first place. Going forward, such access will become less and less valuable as people with something to hide take advantage of the very simple steps that make encryption too strong to attack with brute-force methods.

Given that, I’d prefer the security of knowing that Apple hasn’t deliberately broken the software protections in iOS to the potential security of whatever information the F.B.I. may discover on this particular iPhone. And looking forward, I am more willing to accept the risks of encryption the government can’t break than the risks involved in requiring law-abiding companies to put backdoors into their software, and hoping that bad actors don’t learn how to exploit them.